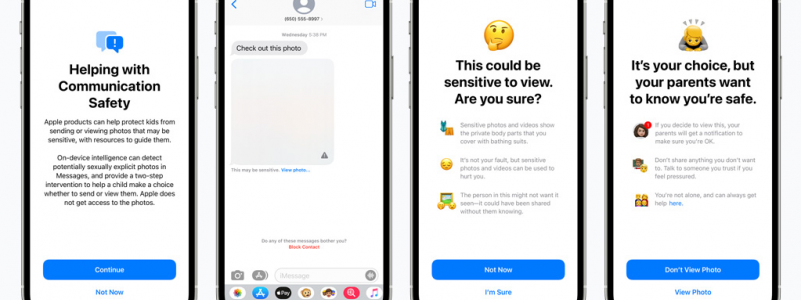

Apple hit the headlines a month ago when it announced a new child safety feature. The feature was designed to scan photos on Apple devices for known child sexual abuse material (CSAM) before they are uploaded to iCloud Photos. However, the company had to put the brakes on the launch after privacy concerns and backlash from Apple’s users and critics started pouring in.

“At Apple, our goal is to create technology that empowers people and enriches their lives — while helping them stay safe. We want to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material (CSAM),” Apple announced.

The announcement of the CSAM scanner was not well received by the public. Its unveiling sparked debate over whether it could be used for mass surveillance.

Based on feedback from users and various advocacy groups, Apple has decided to postpone the launch of the CSAM scanner. The company says it will take additional time to collect input and make improvements to the feature before releasing it.

Although Apple’s CSAM scanning feature has drawn considerable controversy, what many people don’t know is that tech companies such as Google and Facebook already scan for CSAM. These companies scan images uploaded to their servers for CSAM as a standard cyber safety measure intended to keep their platforms safe for users.

Apple’s CSAM scanning feature was originally slated to launch with iOS 15 and macOS Monterey later this year. Following the delay, Apple has not yet announced a revised launch date or how the feature’s design will change.